Revolutionizing AI Image Generation with ControlNet


Chapter 1: Introduction to ControlNet

ControlNet marks a significant step forward in AI-generated imagery. A recent paper describes a way to steer diffusion models with sketches, outlines, depth maps, or human poses, opening new possibilities for AI image and video creation. This gives designers far more creative control and room for customization.

Section 1.1: Achieving Control

What makes ControlNet remarkable is how it tackles spatial consistency. Previously, there was no reliable way to tell an AI model which parts of an input image it should preserve. ControlNet changes this by letting Stable Diffusion models accept additional input conditions that steer generation with precision. As Reddit user IWearSkin aptly noted:

Section 1.2: Showcasing ControlNet's Features

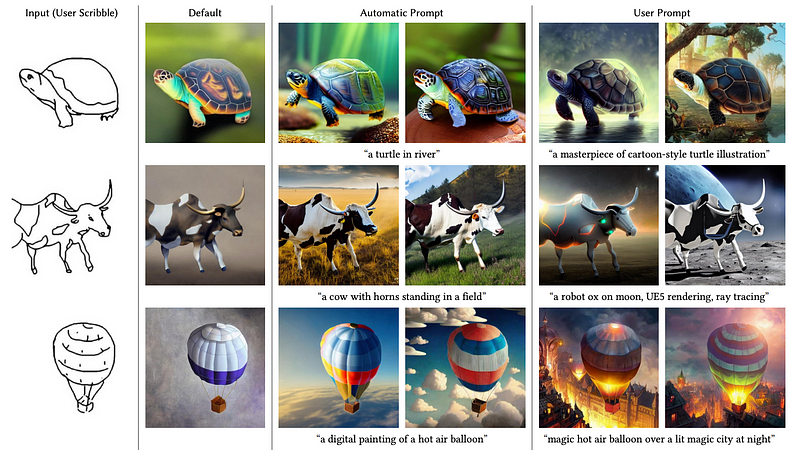

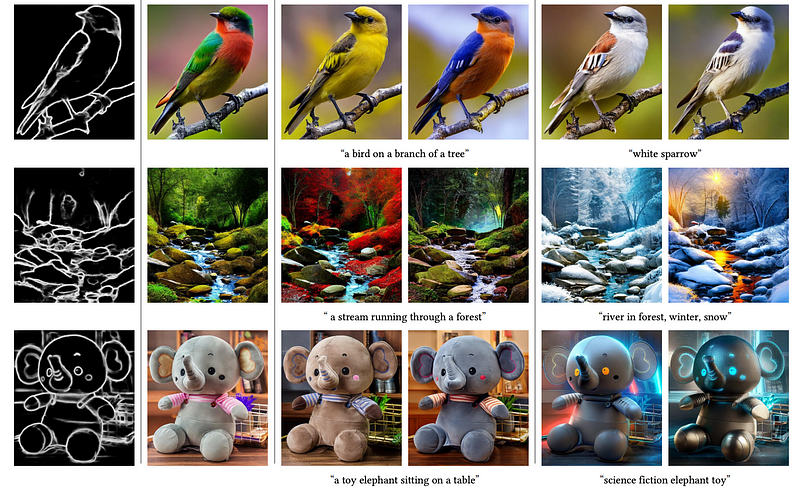

To illustrate the power of ControlNet, a variety of pre-trained models have been released. Each model controls image-to-image generation through a different input condition, such as edge detection, depth analysis, sketch processing, or human poses.

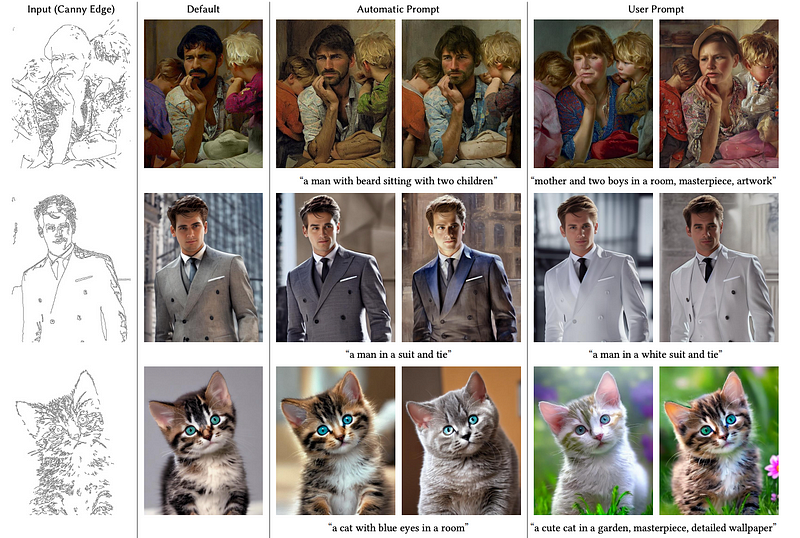

Subsection 1.2.1: Canny Edge Model

For instance, the Canny edge model runs an edge detection algorithm to extract a Canny edge image from a given input image, then uses that edge image, together with a text prompt, to guide diffusion-based image generation.
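The edge-extraction step can be pictured with a small sketch. This is not the Canny algorithm itself but a simplified stand-in (Sobel gradients plus a single threshold) in plain Python; the function name `sobel_edge_map` and the threshold value are assumptions made for illustration. Full Canny additionally smooths the image, thins edges with non-maximum suppression, and links them with hysteresis thresholding.

```python
# Simplified stand-in for Canny edge detection: Sobel gradients plus one
# threshold. The function name and threshold are illustrative assumptions.

def sobel_edge_map(gray, threshold=100):
    """Return a binary edge map (0 or 255) for a 2D list of grayscale values."""
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    # Border pixels are left at 0 because the 3x3 kernel does not fit there.
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Horizontal Sobel response (right column minus left column).
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            # Vertical Sobel response (bottom row minus top row).
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            magnitude = (gx * gx + gy * gy) ** 0.5
            edges[y][x] = 255 if magnitude >= threshold else 0
    return edges


# Toy 5x6 image: dark left half, bright right half (a vertical step edge).
image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
edge_map = sobel_edge_map(image)
for row in edge_map:
    print("".join("#" if value else "." for value in row))
```

The resulting black-and-white map is the kind of image a Canny-conditioned model would receive as its control input. In practice an established implementation of Canny would be used rather than a hand-rolled filter like this one.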

Section 1.3: HED Model and Pose Detection

Similarly, ControlNet's HED model controls generation from an input image via HED boundary detection.

Another example is ControlNet’s pose detection model:

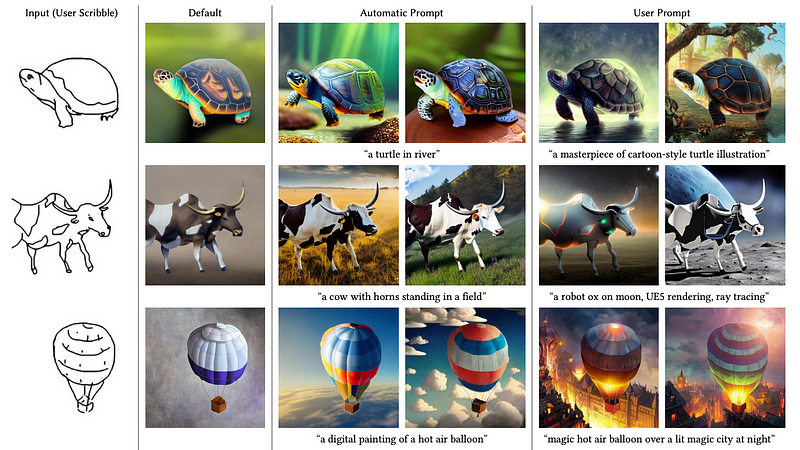
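A pose-conditioned model is typically fed a rendered skeleton image rather than raw coordinates. The sketch below shows one way such a control image could be drawn from keypoints. The keypoint names, coordinates, and limb list are hypothetical; in practice a pose detector would supply them from a photo.

```python
# Hypothetical keypoints (x, y) on a 9x9 grid. A real pose detector would
# estimate these from an input photo.
KEYPOINTS = {
    "head": (4, 0),
    "neck": (4, 2),
    "left_hand": (1, 4),
    "right_hand": (7, 4),
    "hip": (4, 5),
    "left_foot": (2, 8),
    "right_foot": (6, 8),
}
# Hypothetical limb list: pairs of keypoints joined by a line.
LIMBS = [
    ("head", "neck"), ("neck", "left_hand"), ("neck", "right_hand"),
    ("neck", "hip"), ("hip", "left_foot"), ("hip", "right_foot"),
]


def draw_line(canvas, start, end):
    """Rasterize a line segment onto the canvas with Bresenham's algorithm."""
    x0, y0 = start
    x1, y1 = end
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    step_x = 1 if x0 < x1 else -1
    step_y = 1 if y0 < y1 else -1
    error = dx + dy
    while True:
        canvas[y0][x0] = 1
        if (x0, y0) == (x1, y1):
            break
        doubled = 2 * error
        if doubled >= dy:
            if x0 == x1:
                break
            error += dy
            x0 += step_x
        if doubled <= dx:
            if y0 == y1:
                break
            error += dx
            y0 += step_y


def render_pose(keypoints, limbs, width, height):
    """Return a binary skeleton image (list of rows) for the given pose."""
    canvas = [[0] * width for _ in range(height)]
    for first, second in limbs:
        draw_line(canvas, keypoints[first], keypoints[second])
    return canvas


pose_map = render_pose(KEYPOINTS, LIMBS, width=9, height=9)
for row in pose_map:
    print("".join("#" if value else "." for value in row))
```

Changing a keypoint and re-rendering produces a new control image, which is how a pose can be edited before generation.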

Chapter 2: Enhancements in Sketch Processing

ControlNet's Scribble model extends sketch-based diffusion, offering even more control and creative freedom:

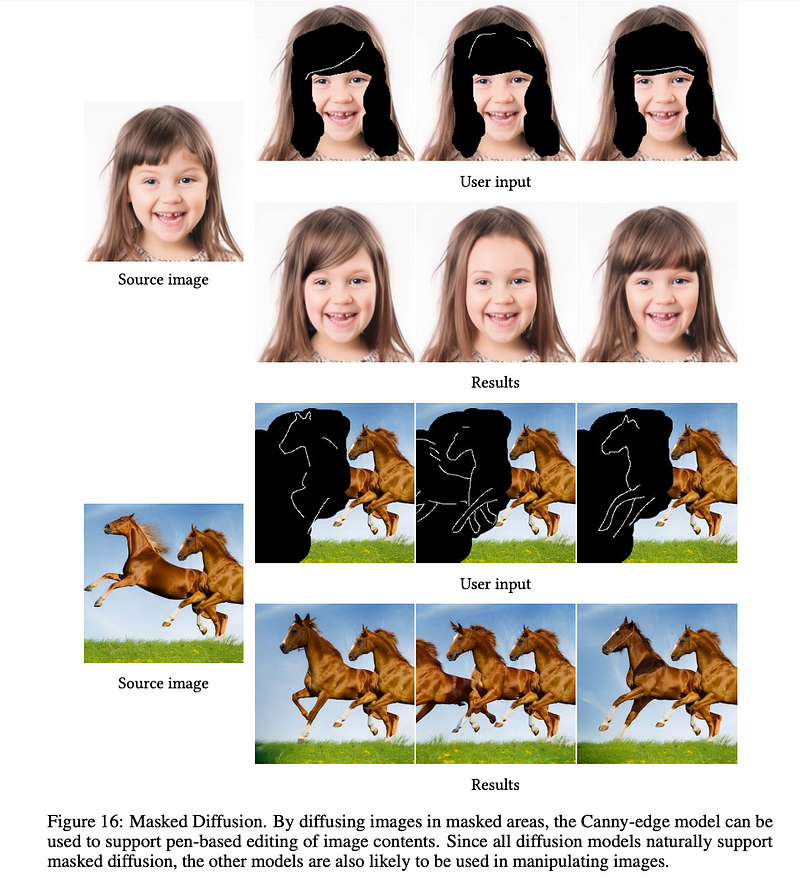

Moreover, ControlNet is compatible with Stable Diffusion’s default masked diffusion. For instance, the Canny edge model can assist in manual image editing and manipulation.
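The idea behind masked editing can be sketched as a simple composite: pixels inside the mask come from the newly generated image, and pixels outside it are kept from the original. This is a deliberately reduced picture, and the `composite` helper is a hypothetical name. Actual pipelines apply the mask as part of the denoising process rather than as a single pixel-level step at the end.

```python
def composite(original, generated, mask):
    """Take generated pixels where mask is 1 and original pixels where it is 0.

    All three arguments are 2D lists of the same shape.
    """
    return [
        [gen if keep_new else orig
         for orig, gen, keep_new in zip(orig_row, gen_row, mask_row)]
        for orig_row, gen_row, mask_row in zip(original, generated, mask)
    ]


original = [[1, 1, 1],
            [1, 1, 1]]
generated = [[9, 9, 9],
             [9, 9, 9]]
# Only the right-hand column is marked for regeneration.
mask = [[0, 0, 1],
        [0, 0, 1]]

edited = composite(original, generated, mask)
print(edited)
```

Everything outside the mask is untouched, which is what makes this kind of editing useful for targeted fixes.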

These examples cover only a fraction of the models featured in the original research paper, which have already inspired new toolkits for artists and designers. Notably, ControlNet has also gone a long way toward fixing the notorious "strange hands" problem in generated imagery.

With the challenge of spatial consistency addressed, we can anticipate further advancements in temporal consistency and AI-driven cinematography!
