A Comprehensive Overview of Generative AI for Beginners


Understanding Generative AI

Generative AI has garnered significant attention in recent months, becoming a prominent subfield within Artificial Intelligence (AI). Tools such as ChatGPT have become widely recognized, transforming how we approach everyday tasks across various professions, including programming.

Terms like "DALL-E," "ChatGPT," and "Generative AI" have permeated social media, professional discussions, and general conversations. It seems that everyone is engaging with these concepts.

But what exactly is generative AI, and how does it differ from traditional AI?

This article aims to clarify the overarching concepts of generative AI. If you've found yourself in discussions about it but still feel uncertain, you're in the right place. Here, we will focus on providing a straightforward explanation without delving into complex coding, covering key ideas succinctly. Specifically, we will explore Large Language Models and Image Generation Models.

Here's a brief outline of what you'll learn:

- Defining generative AI and its distinction from traditional AI

- Exploring Large Language Models

- Understanding image generation

Defining Generative AI

Generative AI refers to a subset of AI focused on developing algorithms capable of producing new data, such as images, text, code, and music. The key distinction between generative AI and traditional AI lies in this ability to create new data that resembles, rather than simply copies, its training data. Furthermore, generative AI can handle a wider variety of data types, such as images and audio, that traditional AI struggles with.

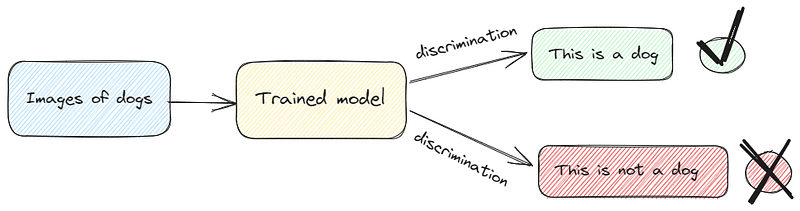

To elaborate technically, traditional AI is often categorized as discriminative AI, where machine learning models are trained to make predictions or classifications on previously unseen data. These models typically work with numerical data and, in some cases, text (as seen in Natural Language Processing).

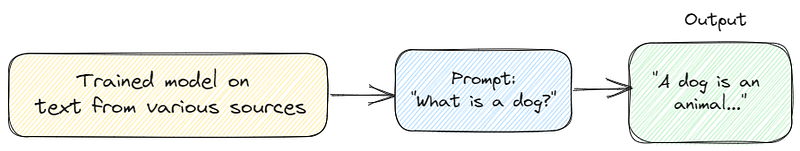

In contrast, generative AI involves training a machine learning model that generates outputs that resemble its training data. These models can operate with diverse data types, including text, images, and audio.

Visualizing the Processes

In traditional AI, we train the model on existing data, after which it can classify or predict outcomes based on new, unseen inputs. For instance, if we train a model to recognize dogs in images, it will classify new pictures as dogs or not based on its training.
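To make the discriminative workflow concrete, here is a minimal Python sketch of a nearest-centroid classifier, one of the simplest discriminative methods. The two numeric features and the tiny dataset are hypothetical stand-ins for what a real image classifier would extract from pixels. Note that the model only learns to separate "dog" from "not dog"; it has no way to produce a new dog.

```python
from math import dist

# Hypothetical training data: (features, label) pairs.
# Features are invented for illustration: (height_cm, ear_length_cm).
training_data = [
    ((60.0, 10.0), "dog"),
    ((55.0, 12.0), "dog"),
    ((25.0, 6.0), "not dog"),
    ((30.0, 5.0), "not dog"),
]

def fit_centroids(data):
    """Training step: average the feature vectors of each class."""
    sums, counts = {}, {}
    for features, label in data:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}

def predict(centroids, features):
    """Prediction step: pick the label whose centroid is closest to the input."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

centroids = fit_centroids(training_data)
print(predict(centroids, (58.0, 11.0)))  # an unseen example → dog
```

The output is always one of the labels seen during training, which is exactly what makes this model discriminative rather than generative.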

Conversely, generative AI models are trained on extensive datasets from various sources. When presented with a user prompt (a natural language query), the model generates an output that aligns with its training. For example, if the model has been trained on text data about dogs, it can describe what a dog is when queried by a user.
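The generative idea can be sketched in a few lines of Python with a bigram model: it records which word tends to follow which in its training text, then extends a prompt one word at a time. This is far simpler than an LLM, which uses a neural network over long contexts, and the three-sentence "dog" corpus is a hypothetical stand-in for real training data. It still shows the core pattern: learn from text, then produce new text that resembles it.

```python
import random
from collections import defaultdict

# Hypothetical toy corpus standing in for "text data about dogs".
corpus = [
    "a dog is a loyal animal",
    "a dog is a domesticated mammal",
    "a dog likes to play fetch",
]

def train_bigrams(sentences):
    """For every word, record the words that followed it in the training text."""
    followers = defaultdict(list)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            followers[current].append(nxt)
    return followers

def generate(followers, prompt, max_words=8, seed=0):
    """Extend the prompt word by word, sampling each next word from the learned followers."""
    rng = random.Random(seed)
    words = prompt.split()
    for _ in range(max_words):
        candidates = followers.get(words[-1])
        if not candidates:  # no continuation was seen during training
            break
        words.append(rng.choice(candidates))
    return " ".join(words)

model = train_bigrams(corpus)
print(generate(model, "a dog"))
```

Because each word is sampled, different seeds can yield sentences that never appeared verbatim in the corpus, yet every word pair comes from the training text.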

Large Language Models

Now, let's delve into the various subfields of generative AI, beginning with Large Language Models (LLMs). According to Wikipedia, an LLM is:

"A computerized language model consisting of an artificial neural network with many parameters (tens of millions to billions), trained on large quantities of unlabeled text using self-supervised learning or semi-supervised learning."

Though the term lacks a formal definition, it typically refers to deep learning models with millions or even billions of parameters, pre-trained on substantial text corpora.

This large-scale training on text enables LLMs to tackle a wide range of language-related tasks, including:

- Text classification

- Question answering

- Document summarization

- Text generation

Unlike standard machine learning models, which are usually trained for a single task, a single pre-trained LLM can handle many of these tasks, often with little or no additional training.

When it comes to prompting LLMs, two concepts are crucial: prompt design and prompt engineering.

Prompt design involves crafting a specific prompt tailored for a particular task. For example, if you wish to translate text from English to Italian, your prompt must clearly indicate that.

Prompt engineering, on the other hand, refers to enhancing the effectiveness of LLMs by incorporating domain knowledge and specific details into prompts, such as keywords, context, and examples.
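The difference between the two can be sketched as two Python functions that only build prompt strings. The function names, the "IT support" domain, and the example sentence pair are hypothetical; the resulting text would be sent to whichever LLM you use, and no specific LLM API is assumed here.

```python
def design_prompt(text: str) -> str:
    """Prompt design: clearly state the one task the model should perform."""
    return f"Translate the following text from English to Italian:\n{text}"

def engineer_prompt(text: str, domain: str, examples: list[tuple[str, str]]) -> str:
    """Prompt engineering: add domain context, constraints, and worked examples."""
    lines = [
        f"You are translating {domain} documents from English to Italian.",
        "Keep technical terms consistent and use a formal register.",
        "",
    ]
    for source, target in examples:  # few-shot examples guide the output format
        lines.append(f"English: {source}\nItalian: {target}\n")
    lines.append(f"English: {text}\nItalian:")
    return "\n".join(lines)

print(design_prompt("The server is down."))
print(engineer_prompt("The server is down.", "IT support",
                      [("Restart the router.", "Riavvia il router.")]))
```

Both prompts ask for the same translation; the engineered one simply gives the model more context to work with.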

There are three primary types of LLMs:

- Generic Language Models: These models predict words or phrases based on language patterns in the training data (e.g., email auto-completion).

- Instruction Tuned Models: These are designed to respond to specific instructions (e.g., summarizing text).

- Dialog Tuned Models: These engage in conversations with users, using prior responses to inform subsequent ones (e.g., AI chatbots).
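One way to picture how a dialog tuned model "uses prior responses" is to keep the whole conversation and hand it back to the model on every turn. The minimal Python sketch below assumes a simple `role: text` transcript format; real chat systems use their own message structures, and the `Conversation` class is hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Conversation:
    """Stores every turn so each new request can carry the full history."""
    turns: list[tuple[str, str]] = field(default_factory=list)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def context(self) -> str:
        """Flatten the history into the text a dialog model would condition on."""
        return "\n".join(f"{role}: {text}" for role, text in self.turns)

chat = Conversation()
chat.add("user", "Summarize: Dogs are loyal, playful mammals.")
chat.add("assistant", "Dogs are loyal and playful.")
chat.add("user", "Now make it shorter.")
print(chat.context())
```

The last request ("Now make it shorter.") is only answerable because the earlier turns travel with it.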

It's worth noting that many publicly available models combine these characteristics. For instance, ChatGPT can summarize text and engage in dialogue, showcasing attributes of both Instruction Tuned and Dialog Tuned Models.

Image Generation

Image generation has been an active field of research for years, but its popularity surged recently with tools like "DALL-E" and "Stable Diffusion," which made the technology widely accessible.

Image generation models can be grouped into four main families:

- Variational Autoencoders (VAEs): These models encode images into a compressed format and decode them back to their original size while learning the underlying data distribution.

- Generative Adversarial Networks (GANs): These frameworks consist of two neural networks that compete against each other: a generator that creates images and a discriminator that tries to tell them apart from real ones.

- Autoregressive Models: These models treat an image as a sequence of pixels and generate it one pixel at a time, with each new pixel conditioned on the pixels generated before it.

- Diffusion Models: Inspired by thermodynamics, these models are currently among the most promising in image generation.
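Of these four families, the autoregressive idea is the easiest to sketch without a deep learning library. The toy Python example below flattens tiny black-and-white images into pixel sequences, estimates how likely a pixel is to be "on" given the previous pixel, and then samples a new image pixel by pixel. Real autoregressive image models use a neural network that conditions on all earlier pixels; conditioning on only the preceding pixel (a first-order Markov chain) and the two training images are simplifying assumptions.

```python
import random

# Hypothetical 4x4 black-and-white training images (1 = ink, 0 = background),
# flattened row by row into 16-pixel sequences.
training_images = [
    [0, 1, 1, 0,
     0, 1, 1, 0,
     0, 1, 1, 0,
     0, 1, 1, 0],
    [1, 1, 1, 1,
     0, 0, 0, 0,
     1, 1, 1, 1,
     0, 0, 0, 0],
]

def fit(images):
    """Estimate P(pixel = 1 | previous pixel), with add-one smoothing."""
    ones = {0: 1, 1: 1}
    totals = {0: 2, 1: 2}
    for pixels in images:
        for prev, cur in zip(pixels, pixels[1:]):
            ones[prev] += cur
            totals[prev] += 1
    return {prev: ones[prev] / totals[prev] for prev in (0, 1)}

def sample_image(probs, size=4, seed=0):
    """Generate pixels one at a time, each conditioned on the pixel before it."""
    rng = random.Random(seed)
    pixels = [rng.randint(0, 1)]  # the first pixel has no predecessor
    while len(pixels) < size * size:
        p_one = probs[pixels[-1]]
        pixels.append(1 if rng.random() < p_one else 0)
    return [pixels[r * size:(r + 1) * size] for r in range(size)]

probs = fit(training_images)
for row in sample_image(probs):
    print("".join("#" if p else "." for p in row))
```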

The operational process of diffusion models involves two steps: a forward diffusion process that gradually adds noise to an image until it becomes unrecognizable, followed by a reverse diffusion process in which a neural network learns to "de-noise" the pixels step by step, restoring the image's structure. Once trained, the model can start from pure noise and de-noise it into a brand-new image.
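The forward (noising) half of this process can be sketched in Python. The sketch assumes the formulation popularized by the DDPM paper: a linear noise schedule from 1e-4 to 0.02 over 1,000 steps, and the closed-form shortcut x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, where ᾱ_t is the product of (1 − β) up to step t and ε is Gaussian noise. The reverse half needs a trained neural network to predict the noise, so it is not shown; the four-pixel "image" is hypothetical.

```python
import math
import random

def linear_betas(steps=1000, start=1e-4, end=0.02):
    """Per-step noise amounts, increasing linearly (assumed DDPM-style schedule)."""
    return [start + (end - start) * t / (steps - 1) for t in range(steps)]

def alpha_bars(betas):
    """Cumulative product of (1 - beta): how much of the original signal's variance survives."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out

def noisy_image(x0, alpha_bar, rng):
    """Jump directly to step t: x_t = sqrt(a) * x0 + sqrt(1 - a) * noise."""
    return [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * rng.gauss(0.0, 1.0)
            for x in x0]

rng = random.Random(0)
x0 = [1.0, -1.0, 1.0, -1.0]  # a tiny "image" with pixel values scaled to [-1, 1]
abar = alpha_bars(linear_betas())
for t in (0, 250, 999):
    print(t, round(abar[t], 4), [round(v, 2) for v in noisy_image(x0, abar[t], rng)])
```

By the last step ᾱ is close to zero, so the output is essentially pure noise; that is the starting point the learned reverse process works back from.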

Combining Forces

If you've followed along, you may wonder, "How do tools like DALL-E generate images based on prompts?" While we've discussed the mechanics of how these models learn, the true power emerges when LLMs are paired with image generation models. This combination allows for the use of natural language prompts to generate images that align with user requests.
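The pairing can be pictured as two stages: something turns the prompt into a numeric representation, and the image generator is steered by that representation. The Python toy below is purely illustrative. The word-to-color "encoder" and the noise-based "generator" are hypothetical stand-ins for the learned neural networks real systems use, and nothing here reflects DALL-E's actual architecture.

```python
import random

# Hypothetical "text encoder": maps known words to an RGB condition vector.
# Real systems learn this mapping with a neural network; a lookup table stands in here.
WORD_TO_COLOR = {
    "red": (255, 0, 0),
    "green": (0, 255, 0),
    "blue": (0, 0, 255),
}

def encode_prompt(prompt):
    """Average the colors of the words the toy encoder recognizes."""
    colors = [WORD_TO_COLOR[w] for w in prompt.lower().split() if w in WORD_TO_COLOR]
    if not colors:
        return (128, 128, 128)  # neutral gray when no word is recognized
    return tuple(sum(c[i] for c in colors) // len(colors) for i in range(3))

def generate_image(condition, size=2, seed=0):
    """Hypothetical generator: random variation steered toward the prompt's condition."""
    rng = random.Random(seed)

    def pixel():
        # Perturb each channel by up to +/-20 and clamp to the valid 0..255 range.
        return tuple(max(0, min(255, c + rng.randint(-20, 20))) for c in condition)

    return [[pixel() for _ in range(size)] for _ in range(size)]

print(generate_image(encode_prompt("a blue sky")))
```

Changing only the prompt changes the image, which is the essence of prompt-driven generation, even though both stages here are deliberately trivial.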

Isn’t that an incredible capability?

Conclusion

In summary, generative AI represents a subset of AI focused on producing new data that resembles the training data. While LLMs generate text and image generation models create visuals, the real strength of generative AI lies in the synergy between these two types, enabling the creation of images in response to natural language prompts.

NOTE: This article draws inspiration from the Generative AI course offered by Google. For further learning on generative AI, consider enrolling in the course.

Federico Trotta

Hello, I'm Federico Trotta, a freelance technical writer. If you're interested in collaboration, feel free to reach out.
